So, this week we're returning to something mentioned in Part II, but which, as you'll see, made its biggest impact in the decade covered by Part III. This post was a lot of fun to write, made more so by the assistance of our very own Polish Eagle, whom I'd like to thank for his advice on Grumman and Long Island history and activities. And thus, without further ado, let us consider these ancient words:

"What goes up must come down."

"Once the rockets are up, who cares where they come down? 'That's not my department,' says Wernher von Braun."

Meditate upon this wisdom we will.

Eyes Turned Skyward, Part III: Post #4

The end of Apollo had brought abrupt changes for almost every NASA supplier, major and minor. Only a few, like Rockwell (manufacturer of the Command and Service Module), were able to weather it without serious changes. Some, like Boeing and McDonnell, managed to turn the loss of large Saturn V contracts into other work, such as the Saturn IC’s first and second stages, remaining critical parts of the post-Apollo programs. Others, however, fell on harder times. The best example, and one representative of hundreds of smaller contractors, was Grumman Aerospace Corporation. Smaller than most of the contractors that had vied for a piece of Apollo, the company had nonetheless managed through hard work to win the Lunar Module contract and then to make that vehicle one of the most reliable and successful of the program. With the beginning of the station program, though, the funding squeeze NASA was passing through made continued lunar surface operations financially impossible, let alone the development of any of the many proposed expanded-operations variants, and Grumman’s Lunar Module contract was quickly terminated. Grumman had also hoped to leverage its aerospace experience into a bid on the NASA Space Shuttle program. When that program, too, fell to the budget axe during the refocus on stations, Grumman was left completely adrift. Even the company’s successful history of naval fighters offered no security, as ongoing issues with the F-14 Tomcat were straining its relationship with the Department of Defense.

The Hubble Space Telescope provided one of the company’s few outlets for success in the 1970s, with Grumman’s 1979 selection as lead contractor for the spacecraft portion of the vehicle (a joint venture of Eastman Kodak and Itek would provide the optical train, including the main mirror). Grumman had long experience with the OAO series of orbiting astronomical observatories and some limited involvement with the Skylab Apollo Telescope Mount, experience it leaned on heavily in a “bet the company” move to save its space division. Luckily, the gamble paid off. The program was not without problems: Grumman could not escape its history of rather chaotic program startups, nor the threat of budget cuts that hung over all of NASA in the early 1980s. Still, Grumman’s space division weathered the decade, and the flawless start to Hubble operations reflected well on the company in spite of a series of development problems. Moreover (at long last), the F-14’s problems had largely settled down, and the fighter’s performance had finally begun to ease the tensions in Grumman’s relationship with the Department of Defense. As a result, Grumman was able to reach for another high-profile program, something of a return to form.

Under the auspices of Reagan’s Strategic Defense Initiative Organization (SDIO), the Department of Defense was seeking the cheap spacelift capability it needed through two prototype spacecraft. One, the X-30, was to be a “spaceplane” of the classic mold, using advanced scramjet engines to reach altitudes and speeds high and fast enough to nearly put it in orbit. The other, the X-40, was a vertical-takeoff-and-landing vehicle testing a simpler reusable design along the lines of existing rocket stages. Most contractors focused on the X-30, which promised a large contract with extensive development. Grumman, however, with its legacy of vertical rocket landings on Apollo and a leaner, hungrier eye, cannily focused on the less attractive prize, reasoning that a maximum-effort proposal for the X-40 stood a better chance than a bid for the X-30. The approach paid off, and Grumman was selected to design, build, and operate the X-40 in coordination with SDIO and the Air Force. Working with liquid hydrogen and other cryogenic propellants for the first time brought the usual Grumman learning curve, but those headaches were overshadowed by the far larger hassles the X-30 developers faced in the extensive basic research needed even to begin detailed design. After design work on the X-40 concluded in 1987, construction and the associated initial qualifications began. The main engineering, along with subsystem assembly such as avionics, fuel systems, and shrouds, would take place at Grumman’s Bethpage, Long Island headquarters, while final assembly and some of the larger titanium work would be done at the Calverton plant established for Tomcat production. In line with conventional flight test practice, the program called for two complete airframes and a complete set of flight spares.

In 1990, fresh off yet another review of the X-30’s slow progress on its advanced engines and its difficulty finding suitable thermal protection systems, SDIO officials arrived for the Customer Acceptance Readiness Review on the first spaceframe of what Grumman had internally nicknamed the “Starcat.” As with most such handovers, the list of open faults was extensive, but many items were largely perfunctory, and by the end of nearly two full days of reviews, all had been accepted or closed. Finally, the first of the two X-40 “Starcats” was carefully wrapped in plastic and loaded onto one of the same Super Guppies that had once carried Lunar Modules for its journey to White Sands Missile Range, leaving its twin to take its place on the final assembly stands.

Under the New Mexico sun, support hardware had already been prepared, and once Starcat Alpha arrived, work began to check out the fueling and support equipment. Since one of the X-40 program’s aims was to test simplified launch operations, the site was fairly primitive: a single hangar/checkout building, a control trailer, and two basic concrete launch/landing pads separated by 500 meters for planned tests of horizontal translation in flight. To eliminate the need for a launch mount, the X-40 would take off from its own retractable landing gear, and it was meant to be serviced on the pad from a simple scissor lift or cherry-picker crane truck rather than a dedicated service tower. April 1990 saw the first static test firing of the X-40’s engines, with the four clustered RL-10s run at throttle settings too low to lift off. After a further week of reviewing the data, and with nerves running high, the team lit the X-40 off once again, and it made its first free flight. Under the command of its onboard computers, the Starcat lifted to a height of several hundred feet, hovered, then descended to land safely. Onlookers marveled at the smooth takeoff and landing. “Just like Buck Rogers,” one was heard to remark. It was an ambitious start, but the testing would only get more challenging. The envelope was pushed again on the second flight in May, which was intended to fly the full duration of the X-40’s design goals. Reaching an apogee of roughly 3 km and spending around 140 seconds in the air, Starcat Alpha demonstrated that it was everything the X-40 program demanded it be.

The next flights grew increasingly ambitious, spaced weekly to allow full review of the data from each. Flight three was the first to translate in flight, moving 150 feet off the pad center, then returning to land, a feat flight four repeated. Flight five was intended to demonstrate the ability to “stick the landing,” the program’s internal jargon for a landing in which, instead of settling slowly with a thrust-to-weight ratio of less than one, the vehicle would throttle its engines nearly to shutdown and fall toward the pad. At a precisely calculated moment, the engines would flare to full power and decelerate the vehicle to a stop just as it reached the pad. Because it was faster, the “sticking” method would make landings more fuel-efficient, preserving more of the vehicle’s capability for the aerial acrobatics planned to test its aerodynamic and thruster flight controls. However, while almost everything went well on the flight, the moment chosen to bring the engines back up was not quite right, and the vehicle was still moving at slightly less than 8.3 m/s when its footpads made contact with the ground. The legs’ hydraulics could not fully absorb the shock, so pre-designed crumple points in the legs and structure absorbed the blow instead. These points were designed to be sacrificial, permanently deforming to save the rest of the structure. Nevertheless, the post-flight inspections and repairs needed to verify that they had indeed protected Starcat Alpha’s key systems would exceed the capabilities of the White Sands facility, and X-40/01 would have to return to the manufacturing facility at Calverton for repairs and inspection. Fortunately, Starcat Bravo was completing checkout, which would allow the program to resume, or at least allow the investigation into the mishap to proceed in parallel with repairs to the damaged spaceframe.

The same Super Guppy that carried X-40/01 back to Calverton in late June returned bearing X-40/02. Starcat Bravo became the focus of avionics inspections, in parallel with experiments on the “Iron Bird” version of the software running on ground servers at Bethpage’s engineering headquarters. The investigation found a mis-calibration introduced when the X-40’s computers were converted from the flight software for conventional landings to the software needed for “stuck” landings. This had caused the IMU to “drift”: the navigation system failed to correctly correlate data from the onboard GPS and radar, overestimated its altitude during the ascent, and thus believed it was farther from the ground than it actually was. Had the software’s picture matched reality, the vehicle would have touched down gently; instead, the real ground lay a little less than 15 feet above where the vehicle thought it was. The software was corrected, and Starcat Bravo made its first flight in August, successfully demonstrating the “stuck” landing.

At roughly the same time back on Long Island, the inspection of Alpha concluded. The sacrificial legs and crumple zones had performed better than predicted, and X-40/01 turned out to have sustained almost no serious damage despite a peak deceleration exceeding 20 g. One member of the engineering team joked that, having landed (mostly) safely under that load, “Add a tail hook, and the damn thing would almost be carrier qualified.” The next morning, the repair engineering review team returned to find that second-shift workers had improvised a tail hook out of cardboard and aluminum foil and taped it to the vehicle, along with a paper Navy roundel. It was a reflection of the project’s high morale: the team had cleared a major hurdle and was moving forward. Alpha had survived and was beginning rework; meanwhile, the second flight of X-40/02, the seventh of the program overall, continued to push the envelope, combining a translation in flight with a stuck landing on the same pad. That success paved the way for the next major challenge: rapid turnaround. On the next flight, in early September, Starcat Bravo lifted off, pointed its nose east, and translated to the second pad, touching down safely. Engineers and technicians converged on the vehicle through the night and all of the following day. Just before sunset, the vehicle lifted off again, demonstrating a 28-hour turnaround, and returned to its original pad. However, during the rapid turnaround, a fuel line on the Number Three engine had been opened for purging but then improperly sealed when the task was handed over to another technician. In flight, hydrogen leaking from the purge point filled the engine bay, and it was ignited by exhaust reflected off the pad as the vehicle touched down. Even as the vehicle settled onto the pad, the resulting explosion blew out the engine bay’s inspection panels.

Testing was halted for the year, and the vehicle returned to Calverton to take up its place in the repair/assembly bay that Starcat Alpha, fully repaired, had vacated only the week before.

Unfortunately, X-40/02’s damage was far more severe than Alpha’s. The fireball inside the engine bay had charred wiring harnesses, blown out insulation, deformed panels, and completely incinerated the management computers on each of the engines. The engines would have to be removed and returned to Pratt & Whitney for repair and recertification, while the Grumman team tore the entire lower vehicle apart to trace the extent of the fire damage. At the same time, the engineering staff and DARPA carried out a thorough review of the X-40 program’s goals, pacing, and handling procedures together with the White Sands staff, who were brought back to Bethpage. Suntans were not the only things they brought with them: complaints about the ground support equipment, funding, staffing levels, and cavalier expectations from Bethpage about flight rates were aired, and the weather was not the only thing around the Bethpage plant that stayed frosty all winter. With the spring, however, work in New Mexico had begun to address some of the worst complaints, and Grumman’s Calverton staff was able to offer some good news: X-40/02’s frame was certified as not permanently damaged, and its engines would need no more than an overhaul. Starcat Alpha’s engine bay was retrofitted to guard against a repeat of the incident, and the vehicle was then packaged and shipped to White Sands.

The 1991 testing campaign got off to a more successful start than the previous year’s. Between April and mid-June, Starcat Alpha made five flights, bringing the program total to 14 in less than a year and a half despite the two major mishaps, a rate close to what Grumman’s cost analysis indicated could be break-even for a reusable first stage. On the fifth of those flights, though, one landing leg failed to lock during deployment, and the vehicle toppled. Fortunately, the Grumman “build them durable” tradition and the previous winter’s review of potential combustion hazards made it nothing more than an embarrassment, and the vehicle was simply sidelined in the hangar for inspection. After ten months of teardown, inspection, overhaul, and reintegration, X-40/02 was shipped from Long Island to White Sands once again in July to take up the slack, marking the first time both vehicles were present at the test site. The twin Starcats shared the hangar for only a few days, though, before Bravo was towed out and erected on the pad for its first flight since the engine bay explosion. A full static fire of the engines was conducted, and on July 3rd, X-40/02 once again took to the sky. With that flight a success, the program moved on to the so-called “swan dive” needed to bring the aerodynamic controls into use, demonstrating the vehicle’s ability to pitch its nose over far enough to bring the control surfaces to bear, then rotate back to vertical before landing on propulsion. The first swan dive flight stayed over the primary pad, demonstrating only the ability to pitch into and out of the correct attitude, but the second, in August, translated to the secondary pad in a “swoop” controlled solely by the fins before pulling the nose up to vertical to land.

However, the flight revealed some less-than-graceful behavior in the aerodynamic control sequences, and the vehicle was lifted off its gear and towed back to the hangar to join Alpha while Bethpage engineers reworked the control code, a process that took the rest of the year as aerodynamic models were re-checked in wind tunnels and with early CFD.

In February 1992, the test program resumed, this time with X-40/01 carrying the results of a winter of code overhauls at Bethpage in its computers. The flight demonstrated the transition into and back out of the swan dive attitude three times in the second-longest flight of the program, only slightly shorter than Alpha’s second flight, which had demonstrated the vehicle’s maximum design duration. However, circumstances caught up with the vehicle. A small crack in one of the inner laminae of the composite aeroshell was stressed by the unusually strong heating of the extended flight, and as that heating cycled with each entry into and exit from the swan dive, the crack grew. On the next flight, which repeated Bravo’s August flight to the auxiliary pad on aerodynamic controls, the crack reached a critical length, compromising a portion of the aeroshell near the Number Two engine access port. On touchdown, the shock was enough to shake loose a section of aeroshell about a foot square. Both vehicles were returned to Calverton. X-40/01’s entire aeroshell was removed, inspected, and replaced from spares, while X-40/02’s was removed, found to be intact, and reinstalled. Both the Bethpage and White Sands teams took advantage of the stand-down to overhaul the vehicles and support systems, which led to an early end to testing for the year.

By 1993, Starcat operations had become fairly routine. X-40/02 was shipped to White Sands and made four flights, expanding the use of the swan dive and successfully demonstrating the rapid turnaround first attempted three years before. On the fourth flight, however, a leak in the oxygen tank led to a small fire on board during descent. Despite the nominal landing, and with the aeroshell failure that had cut short the 1992 season still fresh, Bravo was shipped back to Long Island for a thorough inspection. The issue was traced to an inadequate weld in the liquid oxygen tank, which, through a combination of thermal and mechanical stresses, had opened a pinpoint leak. The entire weld was redone, while X-40/01, inspected and found clear of the issue, was shipped to White Sands to pick up the program. In mid-June, however, on the airframe’s thirteenth flight and the 24th of the program overall, Starcat Alpha’s Number One engine suffered a partial failure, forcing it to abort the nominal mission and make an early landing. With both vehicles temporarily out of commission, the program’s goals were re-examined. Almost every objective the testing had set out to meet had been achieved, essentially exhausting the potential of the Starcat design. Any further testing would likely require a new, larger vehicle closer to the program concept’s fully reusable first stage, an expense the post-Cold War (and rapidly contracting) SDIO could not afford. Moreover, a major change at Grumman headquarters in 1992 had affected the company’s desire to continue the program.

Grumman’s finances had always been shaky, the company essentially living from contract to contract, and the end of F-14 Tomcat production had put its future in doubt. While management felt it had good odds of securing some of the Project Constellation contracts, one or two space contracts could not keep the entire company afloat without the fighter contracts it had always relied on. When the company’s designs were not selected as finalists in the Advanced Tactical Fighter competition, management began to consider whether a merger with another company might be necessary to survive in the post-Cold War market. As it happened, Grumman’s experience was highly desired by another company that had failed in the Advanced Tactical Fighter contest, losing out to the eventual winner, the Northrop F-23. For decades, Boeing had been an outside competitor for Air Force and Navy fighter contracts, hoping to expand from its traditional strengths in bombers, transports, and commercial aircraft into the lucrative fighter market. Despite legends like the B-17, B-29, B-47, and B-52, and the promise of designs such as the XF8B, Boeing had failed time and time again to break into that market, and with the Advanced Tactical Fighter, it had stumbled once more. With only one other fighter competition, the Joint Strike Fighter, on the near horizon, Boeing was determined to do whatever it took to secure the contract. Grumman’s history in fighter design, especially naval fighters, offered a significant chance to gain experienced and talented engineering staff for the forthcoming JSF competition, while its recent experience with Starcat offered opportunities in another, unexpected, arena. Grumman’s non-aviation businesses were also potentially valuable assets, whether sold to raise cash or retained for ongoing profit.

After weighing Grumman’s total potential value to its future, Boeing made an attractive merger offer in late 1992, which Grumman’s management considered carefully and eventually accepted.

Thus, in 1993, when Starcat’s future was being debated, it was by a team under new management and with altered goals. Throughout ’91 and ’92, Grumman engineers had been studying potential applications of Starcat, including high-altitude hops by the existing vehicles using more efficient flight profiles, a small (perhaps also reusable) upper stage to boost research payloads above the Kármán line, and/or development and commercial operation of the always-intended larger derivative. Boeing, however, was more interested in using the Starcat team’s experience to win the Constellation lander contract, and thus did not fight hard against SDIO’s intention to terminate the program. Some of the team saw the lunar contract bid, and the chance to return to Grumman’s spaceflight roots, as an intriguing challenge, and happily accepted the transfer. Some of the core Starcat devotees in both engineering and operations, however, were put off by what they saw as the abandonment of a design with tremendous potential. Several key members of the team thus left Grumman in search of others who might be interested in following the trail Starcat had blazed. In the shutdown, the airframes (technically Air Force property) were reclaimed. Starcat Alpha eventually took up residence in the Smithsonian, while Starcat Bravo was transported to Wright-Patterson Air Force Base in Dayton, Ohio, and placed on display in the Research and Development Hangar of the National Museum of the United States Air Force. After years in storage awaiting disposition, the remaining flight spares and portions of Alpha’s damaged aeroshell were acquired by the Cradle of Aviation Museum on Long Island, where, with help from some Starcat team volunteers, they were assembled with dummy RL-10s into a display replica, the so-called “Starcat Gamma.”

"What goes up must come down."

"Once rockets are up, who cares where they come down? That's not my department says Werner Von Braun."

Meditate upon this wisdom we will.

Eyes Turned Skyward, Part III: Post #4

The end of Apollo had resulted in abrupt changes for almost every NASA supplier, major and minor. Only a few, like Rockwell (manufacturer of the Command and Service Module), were able to weather it without serious changes. Some, like Boeing and McDonnell, managed to spin their losses of large Saturn V contracts into other contracts like Saturn IC’s first and second stages, remaining critical parts of the post-Apollo programs. However, others suffered from harder times. The best example, representative of the hundreds of smaller contractors, was Grumman Aerospace Corporation. Smaller than most of the contractors who had vied for a piece of Apollo, the company had nonetheless managed through hard work to seize the lunar module contract and then worked to make that vehicle one of the most reliable and successful of the program. With the beginning of the station program, though, the funding squeeze NASA was passing through made continued lunar surface operations, let alone the development of any of the many proposed expanded operations variants, financially impossible, leading to the quick termination of Grumman’s Lunar Module contract. Moreover, Grumman had hoped to perhaps leverage its aerospace experience into bidding on the NASA Space Shuttle program. When that program, too, fell to the budget axe during the refocus on stations, Grumman was left completely adrift. Even the company’s successful history of naval fighters was up in the air, as ongoing issues with the company’s F-14 Tomcat were straining its relationship with the Department of Defense.

The Hubble Space Telescope provided one of the only outlets for the company’s successes in the 70s, with its 1979 selection as lead contractor for the spacecraft portion of the vehicle (a joint venture of Kodak Eastman and Itek would provide the optical train, including the main mirror). Grumman had long had experience with the OAO series of solar observatories and limited involvement with the Skylab Apollo Telescope Mount, which it had leaned on heavily in a “bet the company” move to save its space division. Luckily, the gamble paid off, and though the program was not without problems (Grumman could not escape its history of rather chaotic program startups, nor the overhanging threat of budget cuts that loomed over all of NASA for the early 1980s), Grumman’s space division had managed to weather the 1980s, and the flawless start to Hubble operations reflected well on the company in spite of a series of development problems. Moreover (at long last), the F-14s problems had largely settled down, and the fighter’s performance had finally started to ease some of the tensions on Grumman’s relationship with the Department of Defense. The benefit of this was that Grumman was able to reach out for another high-profile program, something of a return to form.

Under the auspices of Reagan’s Strategic Defense Initiative Organization, the Department of Defense was calling for the development of the necessary cheap spacelift capability through the development of two prototype spacecraft. One, the X-30, was to be a “spaceplane” of the classic mold, featuring advanced scramjet engines to carry it to altitudes and speeds high and fast enough to nearly put it in orbit. The other, the X-40, was a vertical-takeoff-and-landing vehicle testing a simpler reusable vehicle along the lines of existing stages. The X-30 received a larger focus by most contractors, as it promised a large contract with extensive development. However, Grumman, with its legacy of vertical rocket landings on Apollo and a leaner, hungrier eye, cannily put its focus on the less attractive prize, reasoning that it would have a better chance with a maximum-effort proposal for the X-40 than with the X-30. This approach paid off, and Grumman was selected to design, build, and operate the X-40 in coordination with SDIO and the Air Force. While the new experience of working with hydrogen and cryogenic fuels took the usual Grumman learning curve, the headaches were overshadowed by the much larger hassles that the X-30 developers were encountering during the extensive basic research needed to even begin detailed design. After design work on the X-40 concluded in 1987, the construction and associated initial qualifications began. While the main engineering would happen at Grumman’s Bethpage, Long Island headquarters as well as subsystem assembly such as avionics, fuel systems, and shrouds, the final assembly and some of the larger titanium work would take place at the Calverton plant established for Tomcat production. In line with conventional flight test protocol, the program was to involve the construction of two complete airframes and a complete set of flight spares. 
In 1990, fresh off yet another review of the lack of significant progress with the X-30’s advanced engines and headaches with finding suitable thermal protection systems, SDIO officials arrived for the Customer Acceptance Readiness Review on the first spaceframe of what Grumman had internally nicknamed the “Starcat.” As with most such handovers, the list of open faults was extensive, but many were largely perfunctory, and by the end of nearly two full days of reviews, all had been accepted or closed. Finally, the first of the two X-40 “Starcat”s was carefully wrapped up in plastic and loaded onto one of the same Super Guppies that had once carried Lunar Modules for its journey to White Sands Missile Range, leaving its twin to take over its place on the final assembly stands.

Under the New Mexico sun, support hardware had already been prepared, and once Starcat Alpha arrived, work began to check out the fueling and support equipment. Since one of the intentions of the X-40 program was to test simplification of launch operations, the site was fairly primitive, with a single hangar/checkout building, a control trailer, and two basic concrete launch/landing pads for the vehicle separated by 500 meters for planned testing of horizontal translation in-flight. To eliminate the need for a launch mount, the X-40 would take off from its own retractable landing gear, and was intended to be serviced on the pad with a simple scissor lift or cherry-picker crane truck, as opposed to a dedicated service tower. April 1990 saw the first static test firing of the X-40s engines, with the four clustered RL-10 engines at low-throttle settings insufficient to lift off. A week of further review of the data was conducted, then, with nerves running high, the X-40 once again lit off, and made its first free flight. Under the command of onboard computers, the Starcat lifted to a height of several hundred feet, hovered, then descended to land safely. Onlookers marveled at the smooth takeoff and landing—“Just like Buck Rogers,” one was heard to remark. It was an ambitious start, but the testing would only get more challenging. The envelope was pushed once again on the second flight in May, which was intended to test the entire duration of the X-40’s design goals. Reaching an apogee of roughly 3 km and spending around 140 seconds in the air, Starcat Alpha demonstrated that it was everything the X-40 program demanded it be.

The next flights got increasingly ambitious, spaced weekly to allow full review of data from each. Flight three was the first to translate in flight, moving 150 feet off the pad center, then diverting back to land once again, a feat flight four repeated. Flight five was intended to demonstrate the ability to “stick the landing,” the program’s internal jargon for a landing where instead of settling slowly down with a thrust-to-weight ratio of less than one, the vehicle would instead simply nearly shut down its engines and fall towards the pad. At the precisely calculated moment, the engines would flare to full power, and decelerate the vehicle to a stop precisely as it reached the pad. By making a faster landing, the “sticking” method would allow more fuel-efficient landings, preserving more of the vehicle’s capability for the aerial acrobatics planned to test its aerodynamic and thruster flight controls. However, while almost all went well in the flight, the moment the engines picked to reignite was not entirely correct, and the vehicle was still moving at slightly less than 8.3 m/s when its footpads made contact with the ground. The legs’ hydraulics could not fully absorb the shock, and instead pre-designed crumple points in the legs and structure absorbed the blow. These points were designed as sacrificial, permanently deforming to save the rest of the structure. Nevertheless, the post-flight inspections and repairs Starcat Alpha would require to verify that the system had indeed protected the vehicle’s key systems from damage would exceed the capabilities of the White Sands facility. X-40/01 would have to be returned to the manufacturing facility at Calverton for repairs and inspection. Fortunately, Starcat Bravo was completing checkout, allowing the program to resume—or, at least, for investigations of the causes of the failure to be carried out in parallel with repairs to the damaged spaceframe. 
The same Super Guppy that carried X-40/01 back to Calverton in late June returned bearing X-40/02. Starcat Bravo became the focus of avionics inspections, in parallel with experiments using the “Iron Bird” version of the software running on ground servers at Bethpage’s engineering headquarters. The investigators discovered a mis-calibration introduced when the X-40’s computers were converted from the flight software for conventional landings to the software needed for “stuck” landings. It had caused the IMU to “drift”: failing to correctly correlate data from the onboard GPS and radar systems, it overestimated its altitude during the ascent and thus believed the vehicle was farther from the ground than it actually was. Had the software’s picture matched reality, the vehicle would have touched down gently; as it happened, the real ground was a bit less than 15 feet higher than where the vehicle thought it was. The software was corrected, and Starcat Bravo made its first flight in August, successfully demonstrating the “stuck” landing.

At roughly the same time back on Long Island, the inspection of Alpha’s damage concluded: the sacrificial legs and crumple zones had functioned better than predicted, and X-40/01 turned out to have sustained almost no serious damage despite a peak deceleration exceeding 20 Gs. One of the engineers joked that, since the vehicle had survived that G-load and landed (mostly) safely, “Add a tail hook, and the damn thing would almost be carrier qualified.” The next morning, the repair engineering review team returned to find that second-shift workers had improvised the missing tail hook out of cardboard and aluminum foil and taped it to the vehicle, along with a paper Navy roundel. It reflected the project’s high morale: they had overcome a major hurdle and were moving forward. While Alpha began rework, the second flight of X-40/02 (the seventh of the program overall) continued to push the envelope, combining a translation in flight with a stuck landing on the same pad. This success set up the next major challenge: testing rapid turnaround. On the next flight, in early September, Starcat Bravo lifted off, pointed its nose east, and translated to the second pad, touching down safely. Through the night and all the next day, engineers and technicians converged on the vehicle. Just before sunset, it lifted off again, demonstrating a 28-hour turnaround, and returned to its original pad. However, in the rush of the turnaround, a fuel line on the Number Three engine had been opened for purging and then improperly sealed when the task was handed over to another technician. In flight, hydrogen leaking from the purge point filled the engine bay, and it ignited from the exhaust backblast off the pad as the vehicle touched down. Even as the vehicle settled onto the pad, the resulting explosion blew out the engine bay’s inspection panels.
Testing was halted for the year, and the vehicle had to return to Calverton to take up its place in the repair/assembly bay that Starcat Alpha, fully repaired, had vacated only the week before.

Unfortunately, X-40/02’s damage was far more severe than the relatively minor damage Alpha had suffered. The fireball inside the engine bay had charred wiring harnesses, blown out insulation, deformed panels, and completely incinerated the management computers on each of the engines. The engines would have to be removed and returned to Pratt & Whitney for repair and recertification, while the Grumman team tore the entire lower vehicle apart to trace the extent of the fire damage. At the same time, the engineering staff and DARPA carried out a thorough review of the X-40 program’s goals, pacing, and handling procedures together with the White Sands staff, who were brought back to Bethpage. Suntans were not the only things they brought with them: complaints about the ground support equipment, funding, staffing requirements, and Bethpage’s cavalier expectations about flight rates were aired, and it wasn’t just the weather around the Bethpage plant that was frosty all winter. By spring, however, work in New Mexico had begun to rectify some of the worst complaints, and Grumman’s Calverton staff was able to offer some good news: certification that X-40/02’s frame had not been permanently damaged and that its engines would need nothing more than an overhaul. Starcat Alpha’s engine bay was retrofitted to guard against a repeat of the incident, and the vehicle was then packaged and shipped to White Sands.

The 1991 testing campaign got off to a better start than the previous year’s. Between April and mid-June, Starcat Alpha made five flights, bringing the program total to 14 flights in less than a year and a half despite the two major failures, a pace close to the flight rate that Grumman’s cost analysis indicated a reusable first stage would need to break even. On the fifth flight, though, one landing leg failed to lock in place during deployment, and the vehicle toppled. Fortunately, Grumman’s “build them durable” tradition and the previous winter’s review of potential combustion hazards made the incident nothing more than an embarrassment, and the vehicle was simply sidelined in the hangar for inspection. After ten months of teardown, inspection, overhaul, and reintegration, X-40/02 was shipped from Long Island to White Sands again in July to take up the slack, the first time both vehicles had been at the test site together. The twin Starcats shared a hangar for only a few days, though, before Bravo was towed out and erected on the pad for its first flight since the engine bay explosion. After a full static fire of the engines, X-40/02 took to the sky once again on July 3rd. With that flight a success, the program moved on to the so-called “swan dive” needed to put the aerodynamic controls into use, demonstrating the vehicle’s ability to pitch its nose over far enough to bring the control surfaces to bear, then rotate back to vertical before landing on propulsion. The first swan dive flight stayed over the primary pad, demonstrating only the ability to pitch into and out of the correct attitude, but the second, in August, again translated to the secondary pad, this time in a “swoop” controlled only aerodynamically by the fins, before pulling the nose up to vertical to land.
However, the flight revealed some less-than-graceful behavior in the aerodynamic control sequences, and the vehicle was lifted off its gear and towed back to the hangar to join Alpha while Bethpage engineers reworked the control code, a process that took the rest of the year as the aerodynamic models were rechecked in wind tunnels and with the era’s primitive CFD.

In February 1992, the test program resumed, this time with X-40/01, its computers loaded with the results of a winter of code overhauls at Bethpage. The flight demonstrated transitions into and back out of the swan dive attitude three times in the second-longest flight of the program (only slightly shorter than Alpha’s second flight, which had demonstrated the vehicle’s maximum design flight duration). However, circumstances caught up with the vehicle. A small crack in one of the inner laminae of the composite aeroshell was stressed by the unusually strong heating of the extended flight, and as the heating cycled with each pitch into and out of the swan dive, the crack grew. During the next flight, which repeated Bravo’s August flight to the auxiliary pad on aerodynamic controls, the crack reached a critical length, compromising a portion of the aeroshell near the Number Two engine access port. On touchdown, the shock was enough to shake loose a section of aeroshell about a foot square. Both vehicles were returned to Calverton. X-40/01’s entire aeroshell was removed, inspected, and replaced from spares, while X-40/02’s was removed, found to be intact, and reinstalled. Both the Bethpage and White Sands teams took advantage of the stand-down to incorporate overhauls to the vehicles and support systems, which led to an early end to testing for the year.

By 1993, Starcat operations had become fairly routine: X-40/02 was shipped to White Sands and made four flights, expanding the use of the swan dive and successfully demonstrating the rapid turnaround first attempted three years before. On the fourth flight, however, a leak in the oxygen tank led to a small fire aboard the vehicle during descent. Although the landing was nominal, caution left over from the 1992 season, cut short by Alpha’s aeroshell failure, led to Bravo being shipped back to Long Island for a thorough inspection. The problem was traced to an inadequate weld in the liquid oxygen tank, which a combination of thermal and mechanical stresses had opened into a pinpoint leak. The entire weld was redone, while X-40/01, inspected and found free of the flaw, was shipped to White Sands to pick up the program. However, during the airframe’s thirteenth flight in mid-June, the 24th of the program overall, Starcat Alpha’s Number One engine suffered a partial failure, forcing it to abort the nominal mission and make an early landing. With both vehicles temporarily out of commission, the program’s goals were reexamined. Almost every objective the testing had set out to achieve had been met, essentially exhausting the potential of the Starcat design. Any further testing would likely require designing a new, larger vehicle closer to the program’s concept of a fully reusable first stage, an expense the post-Cold War (and rapidly contracting) SDIO could not afford to fund. Moreover, a major change at Grumman headquarters in 1992 had altered the company’s appetite for continuing the program.

Grumman had always been financially shaky, essentially living from contract to contract, and the end of F-14 Tomcat production had put the company’s future in doubt. While management felt it had good odds of securing some of the Project Constellation contracts, one or two space contracts couldn’t keep the entire company afloat without the fighter contracts it had always relied on. When the company’s designs were not selected as finalists in the Advanced Tactical Fighter competition, management began to consider whether a merger with another company might be necessary to survive in the post-Cold War market. As it happened, Grumman’s experience was highly desired by another company that had failed in the Advanced Tactical Fighter contest, losing out to the eventual winner, the Northrop F-23. For decades, Boeing had been an outside competitor for Air Force and Navy fighter contracts, hoping to expand beyond its traditional strengths in bombers, transports, and commercial aircraft into the lucrative fighter market. Despite its success with bomber legends like the B-17, B-29, B-47, and B-52, however, and the potential of fighter designs such as the XF8B, Boeing had had little success in winning such contracts, failing time and time again to break into the market. With the Advanced Tactical Fighter, Boeing had stumbled once again. With only one other fighter competition, the Joint Strike Fighter, on the near horizon, Boeing was determined to do whatever it took to secure the contract. Grumman’s history of fighter design, especially naval fighters, offered a significant chance to gain experienced and talented engineering staff for the forthcoming JSF competition, while its recent experience with Starcat offered opportunities in another, unexpected arena. Grumman’s non-aviation businesses were also potentially valuable assets, whether sold to raise cash or retained for ongoing profit.
After weighing Grumman’s total possible value to its future, Boeing made an attractive merger offer in late 1992, which Grumman’s management considered carefully and eventually accepted.

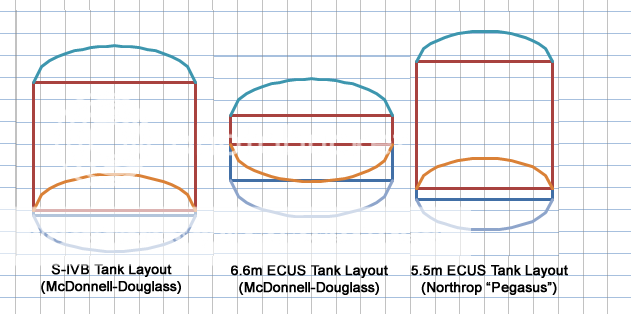

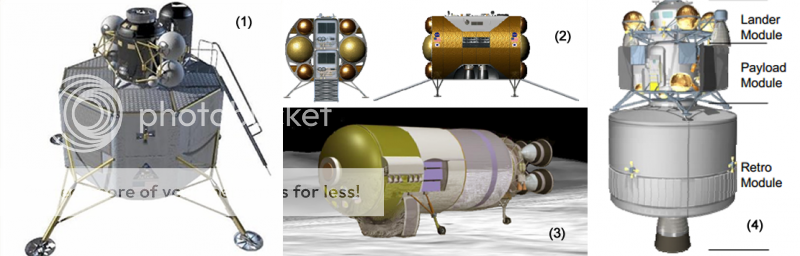

Thus, when Starcat’s future was debated in 1993, the debate took place under new management and with altered goals. Throughout ’91 and ’92, Grumman engineers had been studying potential applications of Starcat, including high-altitude hops by the current vehicles using higher-efficiency flight profiles, adding a small (perhaps also reusable) upper stage to boost research payloads above the von Kármán line, and developing the always-intended larger derivative and operating it commercially. Boeing, however, was more interested in using the Starcat team’s experience to win the Constellation lander contract, and so did not fight hard against SDIO’s intention to terminate the program. Some of the team saw the lunar lander bid, and the chance to return to Grumman’s spaceflight roots, as an intriguing challenge and were happy to accept the transfer. However, some of the core Starcat devotees in both engineering and operations were put off by what they saw as the abandonment of a design with tremendous potential. Several key members of the team thus left Grumman in search of others who might be interested in following the trail Starcat had blazed. In the shutdown, the airframes (which were technically Air Force property) were reclaimed. Starcat Alpha eventually took up residence in the Smithsonian, while Starcat Bravo was transported to Wright-Patterson Air Force Base in Dayton, Ohio, and placed on display in the Research and Development Hangar of the National Museum of the United States Air Force. After years in storage awaiting disposition, the remaining flight spares and portions of the damaged Alpha aeroshell were acquired by the Cradle of Aviation Museum on Long Island, where, with help from volunteers from the Starcat team, they were assembled with dummy replica RL-10s into a display replica, the so-called “Starcat Gamma.”
